What is a Large Language Model (LLM)?

Large language models are AI models trained on massive text datasets to understand and generate human-like text. They power many applications, from chatbots to text summarization.

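LLMs use deep learning to predict the probability of the next word given the words that precede it. More precisely, they predict the next token, which may be a whole word or a fragment of one. Generating text one token at a time from these predictions lets them produce coherent, contextually relevant output.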
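To make the next-word idea concrete, here is a deliberately tiny sketch. It is not how an LLM works internally: as a simplifying assumption it replaces the neural network with a bigram count table, P(next word | previous word), and picks the most probable word at each step (greedy decoding). The function names `train_bigram` and `generate` and the three-sentence corpus are hypothetical. A real LLM conditions on the whole preceding context rather than a single word, and learns its probabilities with a neural network instead of counting.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next word | previous word) from raw bigram counts (toy stand-in for an LLM)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    # Normalise each row of counts into a probability distribution.
    probs = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        probs[prev] = {word: c / total for word, c in nexts.items()}
    return probs


def generate(probs: dict[str, dict[str, float]], start: str, max_words: int = 5) -> list[str]:
    """Greedy generation: repeatedly append the most probable next word."""
    words = [start]
    for _ in range(max_words):
        options = probs.get(words[-1])
        if not options:  # no known continuation: stop early
            break
        # Ties are broken by first appearance in the corpus.
        words.append(max(options, key=options.get))
    return words


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 4)))  # → the cat sat on the
```

Here "cat" follows "the" in 2 of the 6 observed cases, so it is the most probable continuation; an LLM makes the same kind of choice, but from a distribution computed over a much longer context.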

Popular LLMs include GPT-3 and LaMDA. Beyond chatbots, these models are used for tasks such as machine translation and content generation.

Understanding LLMs: A Comprehensive Guide

The quality and diversity of the training data significantly affect an LLM's performance and capabilities, and biases present in that data can be reproduced in the model's outputs.

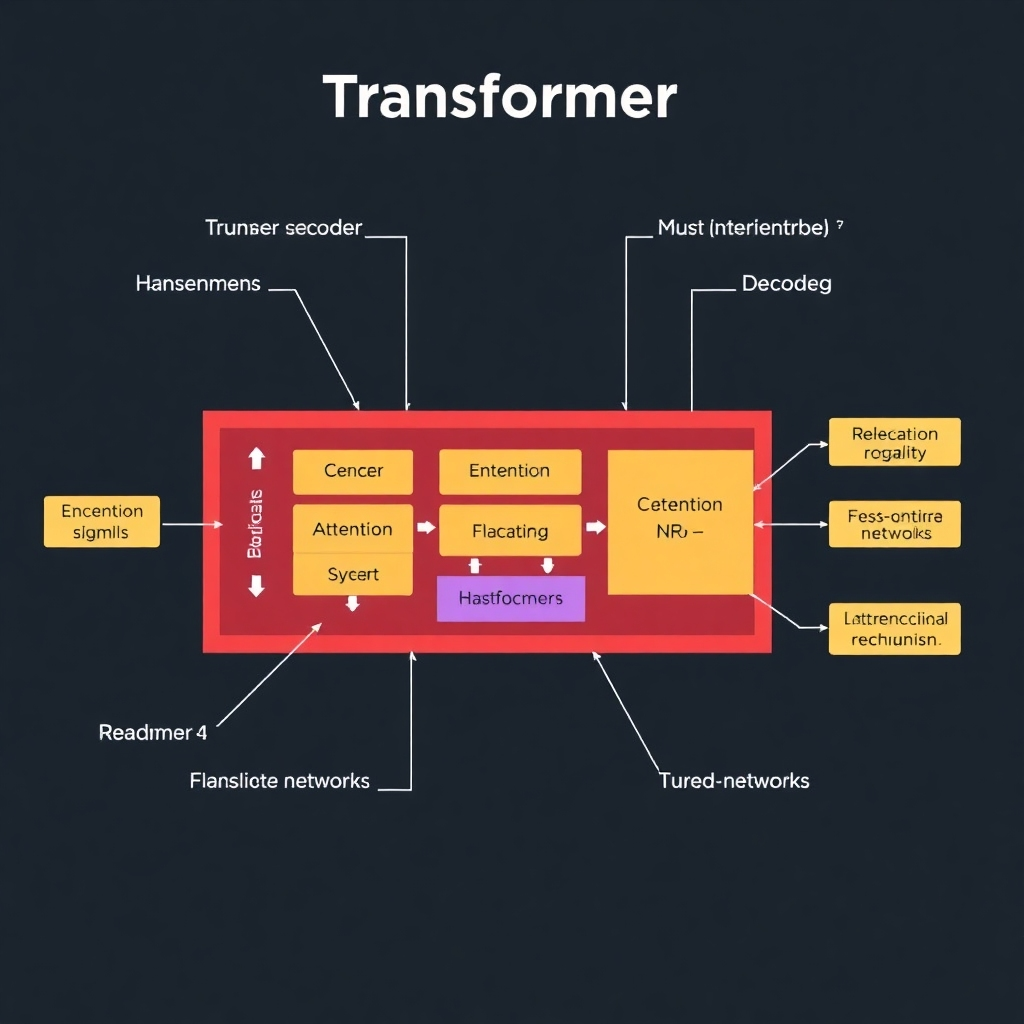

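An LLM's architecture shapes its capabilities and efficiency. Most modern LLMs, including GPT-3 and LaMDA, are built on the Transformer architecture, which uses a mechanism called self-attention to relate every token in the input to every other token. Understanding this architecture is key to grasping how LLMs function.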
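The core operation inside a Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k)·V: each token's query vector is compared with every token's key vector, the scores are turned into weights with a softmax, and each output is a weighted average of the value vectors. The pure-Python sketch below shows only that single step. A real Transformer also learns separate projection matrices for Q, K and V, runs several attention heads in parallel, and stacks many layers; here, as a simplifying assumption, the same toy embeddings serve as Q, K and V, and all helper names are hypothetical.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x m) matrix by an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Scaled dot-product attention; returns (outputs, attention weights)."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of every query with every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Three toy "token embeddings" of dimension 2, used directly as Q, K and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for row in w:
    print([round(p, 3) for p in row])
```

Each row of `w` sums to 1 and says how strongly one token attends to each token in the sequence; the third token, which overlaps with both others, attends most strongly to itself.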

LLMs are transforming various industries, from healthcare and finance to education and entertainment, through automation, improved efficiency, and enhanced decision-making.

LLMs Explained: Demystifying the Technology

LLMs represent a significant advancement in artificial intelligence, enabling machines to understand and generate human-like text far more fluently than earlier language technologies.

Despite their capabilities, LLMs have limitations, including biases inherited from their training data, susceptibility to adversarial attacks, and a lack of true understanding of the text they process.

Ongoing research continues to expand what LLMs can do and to address their limitations, and future language models are likely to be more sophisticated and capable still.