What Makes LLMs Powerful

**Massive Datasets:** The scale of the training data is a primary driver of an LLM's power. Training corpora are typically measured in terabytes of text and code, often filtered down from even larger raw web crawls. A corpus of this size exposes the model to a wide range of writing styles, vocabulary, grammatical structures, and contextual nuances, and lets it learn statistical relationships between words, phrases, and sentences that smaller datasets cannot support. This is not just memorization: the model learns to predict the probability of the next word (more precisely, the next token) given its context, and in doing so captures subtle semantic and pragmatic information. Diversity matters as much as size. A model trained only on scientific papers will behave differently from one trained on a mix of news articles, books, code, and social media posts, and the variety of the training data translates directly into the model's ability to generate coherent, contextually relevant, and stylistically varied text. Datasets also evolve: as new data is added and existing data is refined, successive models trained on them improve and adapt. Quality is equally important. Datasets that contain biases or inaccuracies produce models that reflect and can amplify those flaws, so careful curation and cleaning of the training data are essential to producing robust and reliable LLMs.
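
To make "predicting the probability of a word given its context" concrete, here is a minimal sketch using a bigram model, which is far simpler than an LLM: the context is only the single preceding word, and probabilities come from raw counts. The corpus and the function names (`train_bigram`, `next_word_probs`) are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Turn raw counts into a probability distribution over next words."""
    total = sum(counts[prev].values())
    if total == 0:
        return {}  # word never seen as a context
    return {word: c / total for word, c in counts[prev].items()}

# Toy corpus (illustrative only; real training sets are vastly larger).
toy_corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(toy_corpus)
probs = next_word_probs(model, "the")
print(probs)
```

On this toy corpus, `probs["cat"]` comes out to 1/3, because two of the six occurrences of "the" are followed by "cat". With so little data most word pairs are never observed at all, which hints at why scale and diversity matter.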

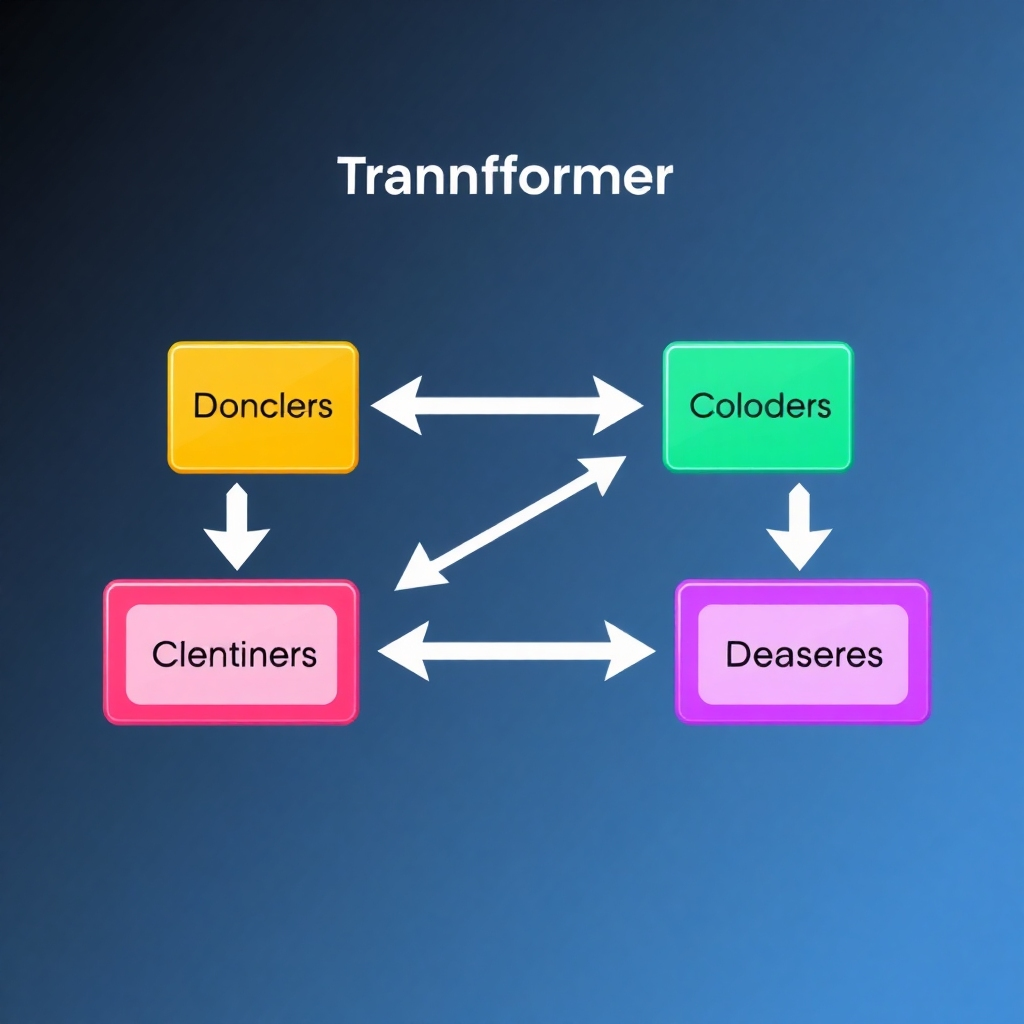

Transformer Architecture

The transformer architecture is the deep learning breakthrough that underpins modern LLMs. Unlike recurrent neural networks (RNNs), which process a sequence one step at a time, transformers rely on a mechanism called "self-attention." Self-attention lets the model weigh the relevance of every word in the input to every other word at once, so it can capture long-range dependencies between words that are far apart in the sequence. Because all positions are processed in parallel during training rather than sequentially, transformers make much better use of modern hardware than RNNs, which enables training much larger models on much larger datasets. The architecture stacks multiple layers of self-attention and feed-forward networks, producing a deep model capable of learning complex patterns and representations. Self-attention on its own has no notion of word order, so transformers add positional encodings to the input. RNNs get order for free from their sequential processing, but they tend to lose information over long spans, which is where attention helps most. The ability to handle long-range dependencies is particularly important for tasks such as machine translation, text summarization, and question answering, where relationships between words across long spans of text are critical. The modular, repeated-block design also scales well, which has let researchers build increasingly large and powerful models.
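
The self-attention computation described above can be sketched in a few lines of plain Python. This is a minimal, single-head version of scaled dot-product attention, with no masking, no positional encodings, and no learned weights; the tiny input `x` and the identity projection matrices are assumptions chosen only so the numbers are easy to follow.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]          # transpose of k
    scores = matmul(q, k_t)                       # every token scored against every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights            # weighted mix of value vectors

# Three "tokens" with 2-dimensional embeddings (made-up values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]               # stand-in for learned projections
output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how much one token draws on every other token. All rows are computed from the same matrix products, which is the parallelism the paragraph above refers to.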

Transfer Learning

Transfer learning is a technique that greatly amplifies the power of LLMs. A large language model is first pre-trained on a massive dataset so that it learns general language representations. This pre-trained model then serves as the foundation for fine-tuning on specific downstream tasks. Instead of training a model from scratch for each new task, transfer learning reuses the knowledge acquired during pre-training, which dramatically reduces training time and data requirements. This is particularly beneficial for tasks with limited data: the pre-trained model provides a strong starting point, which reduces the risk of overfitting and improves generalization. The pre-trained model acts as a powerful feature extractor whose representations of words, phrases, and sentences transfer across tasks. Fine-tuning adjusts the weights of the pre-trained model on a smaller, task-specific dataset so that the model adapts its knowledge to the nuances of that application. The approach is efficient and effective, and it enables high-performing models for a wide range of applications, from chatbots and language translation to code generation and question answering. This ability to adapt one general-purpose model to many tasks is a key factor in the versatility and widespread applicability of LLMs.
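
The idea can be sketched with a toy example. The code below shows the simplest variant of transfer learning, where the "pre-trained" layer is kept frozen and only a small task-specific head is trained on top of its features; full fine-tuning would also update the pre-trained weights. Everything here is hypothetical: `PRETRAINED_W` stands in for weights learned elsewhere, and `task_data` is a made-up four-example dataset.

```python
import math

# Hypothetical "pre-trained" weights: frozen, never updated below.
PRETRAINED_W = [[0.9, -0.4], [-0.3, 0.8]]

def pretrained_features(x):
    """Frozen feature extractor: a fixed linear layer followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(data, epochs=500, lr=0.5):
    """Fit only a logistic-regression head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = pretrained_features(x)
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    """Classify an input using the frozen features plus the trained head."""
    f = pretrained_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) > 0.5

# Tiny task-specific dataset: (input, label) pairs, made up for illustration.
task_data = [([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.0, 1.0], 0), ([0.1, 0.8], 0)]
w, b = train_head(task_data)
print([predict(x, w, b) for x, _ in task_data])
```

Only three numbers (two head weights and a bias) are learned here, which is why a handful of examples is enough. That is the same reason transfer learning helps on tasks with limited data.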

Scaling Laws

The power of LLMs is not determined by architecture or data alone; it also follows scaling laws. Research has shown that, over a wide range, model loss falls predictably, roughly as a power law, as model size (number of parameters), dataset size, and training compute increase. Larger models trained on larger datasets generally perform better on a wide range of tasks, although each further increase buys a smaller absolute improvement, so returns diminish. Understanding these scaling laws is crucial for designing and training more powerful LLMs. It involves not only increasing the size of models and datasets but also allocating a fixed compute budget sensibly between the two and optimizing the training process to use the available hardware effectively. Scaling laws remain a major area of research, with ongoing efforts to find the balance between model size, data size, and computational cost that achieves the best performance. This understanding is critical for the responsible and efficient development of increasingly capable LLMs.
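
A power-law relationship of this kind can be illustrated numerically. The sketch below assumes loss depends on parameter count `N` as `L(N) = (n_c / N) ** alpha`, a functional form commonly used in scaling-law studies. The constants `n_c` and `alpha` are placeholders chosen for illustration, not fitted values from any experiment.

```python
def power_law_loss(n_params, n_c=1e13, alpha=0.08):
    """Illustrative loss curve L(N) = (n_c / N) ** alpha; constants are made up."""
    return (n_c / n_params) ** alpha

sizes = [1e8, 1e9, 1e10, 1e11]                      # model sizes, each 10x the last
losses = [power_law_loss(n) for n in sizes]
gains = [a - b for a, b in zip(losses, losses[1:])]  # absolute improvement per 10x

for n, loss in zip(sizes, losses):
    print(f"N = {n:.0e}  loss = {loss:.3f}")
print("improvement per 10x:", [round(g, 3) for g in gains])
```

Under this assumed curve, every tenfold increase in parameters multiplies the loss by the same factor, so the absolute improvement shrinks at each step. That is the diminishing-returns behavior described above.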

Architectural Innovations

Beyond the core transformer architecture, ongoing research continues to explore architectural innovations that further enhance the capabilities of LLMs. These include improvements to the attention mechanism, such as sparse attention and linear attention, which aim to reduce the cost of standard self-attention (which grows quadratically with sequence length) while maintaining performance. Work on activation functions, normalization techniques, and optimization algorithms also contributes to more efficient and effective models. In addition, researchers are exploring the integration of other neural network architectures, such as convolutional neural networks (CNNs) or graph neural networks (GNNs), alongside transformers to improve the handling of specific types of data or tasks. Together with advances in training techniques and hardware, these innovations drive the continuous improvement of LLMs and lead to increasingly powerful and versatile models.
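
As a rough illustration of how sparse attention cuts cost, the sketch below builds one simple sparsity pattern, a sliding-window (local) mask in which each token attends only to neighbors within a fixed distance, and compares the number of attended pairs with full attention. This is one possible pattern among many, not the method of any particular model; the function names and the sizes used are assumptions for the example.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j (|i - j| <= window)."""
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]

def count_attended_pairs(mask):
    """Number of (query, key) pairs that actually need a score computed."""
    return sum(sum(row) for row in mask)

seq_len = 8
mask = sliding_window_mask(seq_len, window=1)
full_pairs = seq_len * seq_len
sparse_pairs = count_attended_pairs(mask)

for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
print(f"full attention: {full_pairs} pairs, sliding window: {sparse_pairs} pairs")
```

With a fixed window, the number of pairs grows linearly with sequence length instead of quadratically. The trade-off is that distant tokens can no longer attend to each other directly within a single layer.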